The current technology landscape is defined by a frantic gold rush. The prize? “Superintelligence,” or Artificial General Intelligence (AGI) – an AI that matches or surpasses human cognitive abilities. The leading players in this race – the Googles, Microsofts, and OpenAIs of the world – are sparing no expense to get there first. But are they thinking about Sustainable AI?

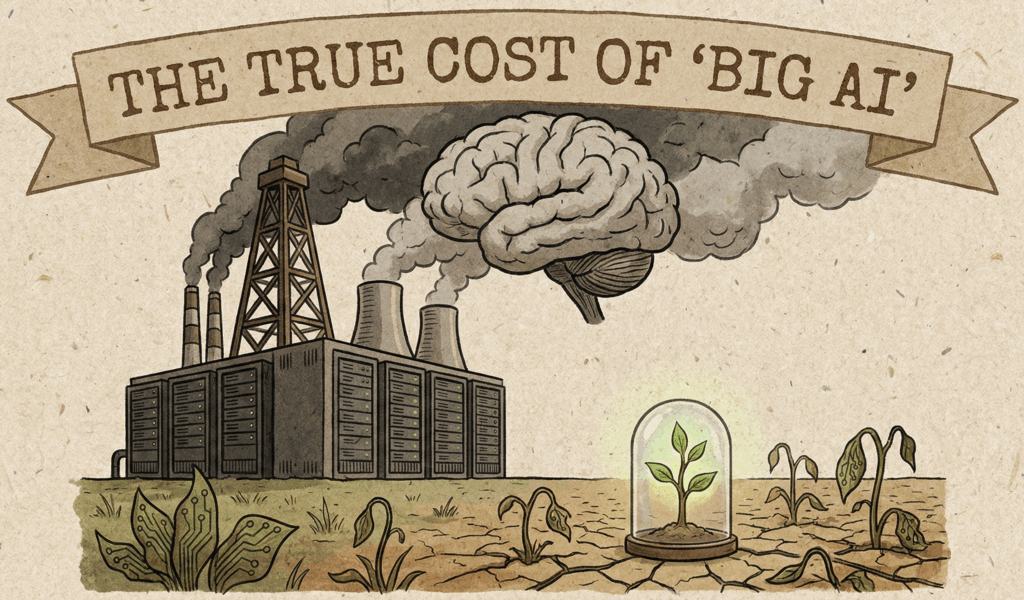

As the hype cycle spins faster, an uncomfortable reality is emerging from the shadows of these sleek digital products. In their single-minded quest for scale, these corporations are adopting a playbook that echoes the early days of “Big Oil”: build massive infrastructure, extract resources relentlessly, and worry about environmental externalities later.

At Parishkaar, we believe technology should solve problems, not create catastrophic new ones. It is time to examine the true cost of “Big AI.”

The Carbon Footprint of Not Having Sustainable AI

We often think of AI as ethereal – code existing in the “cloud.” But the cloud is a physical reality made of steel, silicon, and cooling systems, all demanding colossal amounts of electricity. The race for bigger models requires bigger data centers, and the scale of proposed infrastructure is becoming hard to fathom.

Consider the current trajectory of the industry leaders:

- Meta is planning a data center whose footprint rivals the geographic size of Manhattan, according to Mark Zuckerberg himself.

- OpenAI’s ChatGPT produces as much CO2 each month as 260 flights from New York City to London, according to one study.

- xAI, Elon Musk’s initiative, is currently facing legal disputes related to its Memphis data center. To meet its immense power needs, the facility has relied on gas turbines that critics allege are worsening air pollution and exacerbating health issues for vulnerable local residents.

This isn’t just about future projections; it is an on-the-ground reality of resource consumption that is rapidly outpacing sustainable energy development.

The “Stadium Lights” Syndrome: A Massive Inefficiency

The environmental toll might be justifiable if every watt of energy were being used to cure diseases or run complex climate models. But it isn’t.

The current paradigm relies on massive, general-purpose Large Language Models (LLMs). We are using models trained with the energy demands of a small city to perform trivial tasks – like writing a limerick, generating a meme, or figuring out what to cook for dinner.

It is an inefficiency comparable to turning on all the stadium lights at a sports arena just to find a lost set of keys in the parking lot.

Research is beginning to quantify this waste. Recent studies have demonstrated that using a massive, general-purpose LLM to answer a simple factual question – such as, “What is the capital of Canada?” – can consume up to 30 times more energy than using a smaller, task-specific model designed for information retrieval.

We are using computational bazookas to swat flies, and the planet is paying for the ammunition.
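To make the arithmetic concrete, here is a rough back-of-the-envelope sketch. It takes the "up to 30 times" figure above as an upper bound and asks how much energy a workload would save if its simple queries went to a small model instead. The energy units, the function names, and the 50% share of simple queries in the usage note are illustrative assumptions, not measurements.

```python
# Relative energy per query, in arbitrary units (hypothetical scale).
# The 30x ratio is the upper bound quoted in the text, not a measured constant.
SMALL_MODEL_ENERGY = 1.0
LARGE_MODEL_ENERGY = 30.0 * SMALL_MODEL_ENERGY


def workload_energy(total_queries: int, simple_fraction: float,
                    route_simple_to_small: bool) -> float:
    """Total energy (arbitrary units) for a batch of queries.

    simple_fraction is the assumed share of queries a small model could handle.
    Complex queries always go to the large model.
    """
    if not 0.0 <= simple_fraction <= 1.0:
        raise ValueError("simple_fraction must be between 0 and 1")
    simple = total_queries * simple_fraction
    complex_queries = total_queries - simple
    simple_cost = SMALL_MODEL_ENERGY if route_simple_to_small else LARGE_MODEL_ENERGY
    return simple * simple_cost + complex_queries * LARGE_MODEL_ENERGY


def savings_fraction(simple_fraction: float, total_queries: int = 1000) -> float:
    """Share of energy saved by routing simple queries to the small model."""
    baseline = workload_energy(total_queries, simple_fraction, False)
    routed = workload_energy(total_queries, simple_fraction, True)
    return 1.0 - routed / baseline
```

Under these assumptions, if half of all queries are simple, `savings_fraction(0.5)` comes out at roughly 0.48: almost half of the energy bill disappears without touching the hard problems at all. The real number depends entirely on the actual ratio and the actual mix of queries.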

The Economic Moat: Sidelining Innovation

The environmental crisis of AI feeds directly into an innovation crisis.

The immense energy requirements of state-of-the-art models translate into immense financial costs. If competing in the AI space requires building multi-billion-dollar data centers and securing dedicated power plants, who can afford to play?

Only a handful of Big Tech giants have the capital to sustain this burn rate. This creates an insurmountable economic moat. Startups with brilliant ideas for specialized AI, academic researchers wanting to explore alternative architectures, and nonprofits seeking to use AI for social good are effectively sidelined.

We risk a future where the direction of a technology that will impact billions of lives is dictated solely by three or four corporate boardrooms, driven by the necessity to recoup massive infrastructure investments rather than the genuine needs of society.

The Path Forward: Smarter, Sustainable AI

The analogy to Big Oil is meant as a warning. We cannot afford to repeat the mistakes of the Industrial Revolution in the digital age.

The future of AI shouldn’t belong solely to brute-force scaling. The next great breakthrough shouldn’t just be a bigger model; it should be a more efficient one. We need a pivot toward small language models (SLMs), specialized AI agents, and energy-efficient hardware architectures that can bring the benefits of AI without burning down the house to fuel the furnace.

At Parishkaar, we believe in technology that is sustainable, equitable, and smart. The current path of “Big AI” is failing those tests. It’s time to demand better, and to demand Sustainable AI. We will discuss small AI in more detail, with examples, in our next post. Stay tuned.